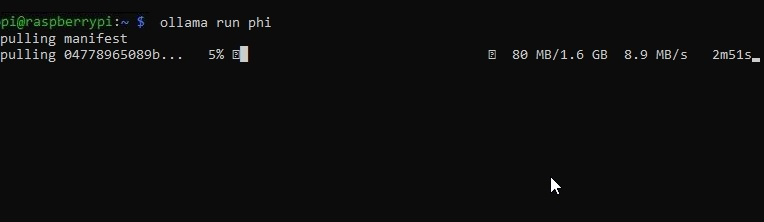

Running Phi4-mini-reasoning on Raspberry Pi opens up exciting possibilities for hobbyists and developers alike, as this compact model is tailored to operate within the constraints of a single-board computer. With 3.8 billion parameters and a modest storage requirement of just 3.2 GB, the Phi4-mini-reasoning serves as a practical entry point for deploying AI models in the community-oriented Raspberry Pi ecosystem. Although performance may not rival that of larger models, its four-bit quantization enables usable AI functionalities right at your fingertips. In a detailed tutorial, [Gary Explains] demonstrates how to integrate this Raspberry Pi LLM into your setup, showcasing its ability to tackle basic reasoning tasks. Whether you’re exploring AI on Raspberry Pi for educational purposes or personal projects, this model embodies the future of compact AI applications in everyday computing.

Running a compact model such as Phi4-mini-reasoning on a Raspberry Pi offers a useful perspective on machine learning in constrained environments. With 3.8 billion parameters, the model is small enough for enthusiasts to experiment with on inexpensive hardware. Deploying an LLM on a Raspberry Pi also shows what quantization buys: a much smaller memory footprint, with some loss compared to higher-precision versions and with speed still limited by the hardware. Guides such as the one from [Gary Explains] show how to integrate such a model into a simple setup, and working through a project like this is a practical way to deepen your understanding of artificial intelligence.

Introduction to Phi4 LLM and Raspberry Pi Integration

Microsoft’s Phi4 LLM, notable for being a large language model with 14 billion parameters, presents challenges for deployment on devices with limited resources. The impressive size of the original model requires about 11 GB of storage, making it infeasible for smaller computing platforms such as the Raspberry Pi. However, there exists a more manageable alternative called the Phi4-mini-reasoning model, featuring a reduced size with 3.8 billion parameters and approximately 3.2 GB of storage requirement. This makes it a viable candidate for those looking to harness the power of AI on Raspberry Pi, allowing enthusiasts and developers to integrate sophisticated language processing capabilities into their projects without the need for high-end hardware.

The Phi4-mini-reasoning model makes it possible to run AI-driven applications on smaller devices, putting advanced language processing within reach of more people. It retains a fair degree of the original model’s functionality, and its four-bit quantization keeps the memory footprint small. While performance may not match that of its larger counterpart, the fact that it runs on a Raspberry Pi at all demonstrates the potential for deploying language models on everyday devices, which should encourage innovation in the maker community.
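As a rough sanity check on the sizes quoted above, the sketch below converts a parameter count and bit width into an approximate size, and back-computes the average bits per parameter implied by a given file size. The function names are illustrative, and 1 GB is assumed to mean 10^9 bytes. Real model files also carry metadata and may keep some tensors at higher precision, so treat the results as estimates only.

```python
def model_size_gb(num_params: float, bits_per_param: float) -> float:
    """Approximate size in GB (10**9 bytes) of weights stored at a given bit width."""
    return num_params * bits_per_param / 8 / 1e9


def implied_bits_per_param(size_gb: float, num_params: float) -> float:
    """Average bits per parameter implied by a file size and a parameter count."""
    return size_gb * 1e9 * 8 / num_params


if __name__ == "__main__":
    mini_params = 3.8e9  # Phi4-mini-reasoning, figure quoted in the article
    full_params = 14e9   # Phi4, figure quoted in the article

    print(f"3.8B params at 16 bits: {model_size_gb(mini_params, 16):.1f} GB")
    print(f"3.8B params at  4 bits: {model_size_gb(mini_params, 4):.1f} GB")
    print(f"Bits/param implied by a 3.2 GB file: "
          f"{implied_bits_per_param(3.2, mini_params):.1f}")
    print(f"Bits/param implied by an 11 GB file: "
          f"{implied_bits_per_param(11, full_params):.1f}")
```

A pure four-bit estimate for 3.8 billion parameters comes out at about 1.9 GB, below the quoted 3.2 GB. That gap suggests the file is not uniformly four-bit, but the article does not state the exact layout.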

Understanding Quantization in AI Models

Quantization is a key technique in AI and deep learning: it reduces the precision of the numbers used to represent model parameters. This matters most when running large models on edge devices like the Raspberry Pi. In the case of Phi4-mini-reasoning, four-bit quantization means the model’s weights are stored at much lower precision than the usual floating-point formats, which significantly reduces the memory footprint. This lets the model fit within the limited resources of a Raspberry Pi, though it may not perform as well as higher-precision versions. For many practical applications, that trade-off is worthwhile.
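To make the idea concrete, here is a minimal sketch of symmetric four-bit quantization in plain Python. It is a deliberately simplified scheme with one shared scale for the whole list, not the format Phi4-mini-reasoning actually ships in; formats used in practice typically quantize weights in small blocks, each with its own scale. The function names and sample weights are made up for illustration.

```python
def quantize_4bit(weights):
    """Map floats to signed 4-bit integer codes in [-8, 7] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 7 if max_abs > 0 else 1.0
    # Round to the nearest code and clamp to the 4-bit signed range.
    codes = [max(-8, min(7, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate floats from the integer codes."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    weights = [0.7, -0.33, 0.12, 0.02]  # illustrative values, not real model weights
    codes, scale = quantize_4bit(weights)
    restored = dequantize(codes, scale)
    for w, c, r in zip(weights, codes, restored):
        print(f"original={w:+.3f}  code={c:+d}  restored={r:+.3f}  error={abs(w - r):.3f}")
```

Each weight now needs only four bits plus a share of the scale, and the error column shows the precision lost in exchange.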

The implications of quantization extend beyond storage; they also affect the speed and efficiency of model execution. Even so, quantization does not close the hardware gap: running the Phi4-mini-reasoning model on a Raspberry Pi is much slower than running it on a desktop computer with a powerful GPU. Gary’s testing shows this with a simple problem, the typical “Alice question,” where processing on a Raspberry Pi takes about 10 minutes. For hobbyists and others exploring AI on Raspberry Pi, this slower speed can be acceptable, especially when budget and accessibility are the deciding factors.

Performance Expectations of the Phi4-mini Reasoning Model

When evaluating the performance expectations for running the Phi4-mini-reasoning model on devices such as a Raspberry Pi, it is essential to note that the execution speed and response time can vary greatly. While the initial setup on more powerful systems like PCs allows for rapid inference, the limitations inherent in Raspberry Pi hardware mean that users might experience delays. For instance, the 10-minute processing time highlighted in Gary’s demonstration could deter some users but also serves as a valuable learning tool for those wanting to experiment with AI and LLMs on a budget-friendly platform.
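If you want to measure response time on your own hardware, a small timing wrapper is enough. The sketch below is generic: `generate` is a hypothetical callable standing in for whatever runtime you use to call the model, and `fake_generate` is a placeholder so the example runs without a model installed.

```python
import time
from typing import Callable, Tuple


def timed(generate: Callable[[str], str], prompt: str) -> Tuple[str, float]:
    """Run generate(prompt) and return the answer with elapsed wall-clock seconds."""
    start = time.perf_counter()
    answer = generate(prompt)
    elapsed = time.perf_counter() - start
    return answer, elapsed


def fake_generate(prompt: str) -> str:
    """Hypothetical stand-in for a real model call."""
    time.sleep(0.01)  # simulate some work
    return "placeholder answer"


if __name__ == "__main__":
    answer, seconds = timed(fake_generate, "How many sisters does Alice's brother have?")
    print(f"Answer: {answer}")
    print(f"Elapsed: {seconds:.2f} s ({seconds / 60:.2f} min)")
```

Swapping `fake_generate` for a real model call lets you compare the same prompt on a PC and on a Raspberry Pi.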

Despite its slower processing capabilities, the Phi4-mini-reasoning model still presents an intriguing option for Raspberry Pi users looking to dabble in AI. This sort of deployment allows enthusiasts to experiment with natural language processing, understand how AI models operate, and even fine-tune their applications for specific use cases. Users might find creative ways to leverage the model, from building chatbots to crafting interactive educational tools, ultimately pushing the boundaries of what can be achieved with deployed AI models on compact hardware.

Alternatives to Phi4 LLM for Raspberry Pi

While the Phi4-mini-reasoning model is a strong contender for those seeking to implement AI on Raspberry Pi, it’s important to explore alternatives that may suit different needs and capabilities. There are several smaller models available in the open-source community that can operate efficiently on limited hardware. These models often come with fewer parameters, thus requiring less storage and computation power while still providing useful language processing features. Whether it’s addressing simple Q&A tasks or providing assistance in more straightforward applications, these alternative models can be a great fit.

Moreover, many of these alternatives offer flexibility for developers, allowing them to tailor the model to specific applications or user requirements. Smaller models might employ similar quantization techniques and optimizations to maximize performance on low-resource devices, making them excellent choices for Raspberry Pi projects. Exploring these alternatives gives developers a broader toolkit when integrating AI capabilities into their creations, fostering creativity and innovation within the community.

Exploring Practical Applications for AI on Raspberry Pi

Implementing AI on Raspberry Pi opens a vast range of potential applications that can enhance everyday projects. Users can develop chatbots capable of interactive dialogues, virtual assistants that respond to voice commands, or educational tools that simplify complex subjects through personalized learning experiences. The ability to run the Phi4-mini-reasoning model on such compact hardware signifies the shift towards democratized access to advanced AI technologies, where even amateurs can create intelligent applications without needing extensive computational resources.

As developers experiment with AI on Raspberry Pi, they can uncover not just novel applications but also refine their understanding of AI models, including aspects like quantization and model efficiency. Projects could involve building language translators, content recommendation systems, or sentiment analysis tools, showcasing how accessible technology can spur inventive solutions in various sectors. Ultimately, running AI models on Raspberry Pi not only serves as a platform for experimentation but also contributes to significant developments in potential user-centered technologies.

Challenges of Running AI Models on Low-Resource Devices

Deploying AI models, especially large ones like LLMs, on low-resource devices like the Raspberry Pi comes with its fair share of challenges. The most significant barrier is limited computational power. Although the hardware keeps improving, the Raspberry Pi still lags well behind high-end computers with dedicated graphics processing units, and the gap is most pronounced when running sophisticated models that demand substantial processing capability.

Moreover, the aspect of memory management plays a crucial role when running AI models on these devices. Users need to be well-versed in optimizing resource allocation and managing model size through techniques such as quantization. While Phi4-mini-reasoning can serve its purpose even on a Raspberry Pi, the learning curve involved in optimizing these models and understanding their limitations could pose additional hurdles for those new to AI development.

Is the Investment in Raspberry Pi AI Projects Worth It?

The question of whether investing in AI projects on platforms like Raspberry Pi is worthwhile largely depends on the user’s objectives and expectations. For hobbyists and educators, utilizing the Phi4-mini-reasoning model or similar AI tools can serve as an engaging introduction to natural language processing. The experience gained from exploring deployment strategies, troubleshooting model performance, and understanding model intricacies can be invaluable, laying a strong foundation for future projects.

Furthermore, the cost-effectiveness associated with using Raspberry Pi comes into play. With affordable hardware and a plethora of open-source resources, users can experiment with deploying AI without the hefty budget often associated with traditional computing setups. Even if the outcomes are not perfect or instantaneous, the benefits of hands-on learning and the potential for innovative applications often far outweigh the initial time and resource investments.

Community and Support for AI Development on Raspberry Pi

Community support plays a vital role in the development and implementation of AI models on Raspberry Pi. Enthusiasts can tap into online forums, user groups, and social media platforms to share their experiences and seek guidance. Platforms like GitHub provide repositories of code and documentation necessary for deploying AI models, ensuring that developers are not working in isolation. This collaborative spirit also encourages knowledge-sharing regarding challenges faced, such as tweaking models to suit low-resource environments.

Moreover, having access to a strong community allows newcomers to learn from the successes and failures of others. Whether it’s advice on optimal quantization techniques, performance tuning, or exploring alternative models, the Raspberry Pi and AI communities are rich resources for anyone looking to enhance their projects. As interest in AI continues to grow, the support networks surrounding these initiatives are expected to evolve, leading to even greater advancements and accessibility for all.

Future Prospects for AI Models on Raspberry Pi

Looking forward, the integration of AI models on Raspberry Pi devices represents an exciting frontier for technological advancements. As the capabilities of AI models continue to expand, so too does the potential for deploying increasingly sophisticated applications on compact, affordable hardware. Innovations in quantization, model compression, and processing efficiency will likely lead to more robust LLM alternatives suited for Raspberry Pi, empowering developers to push the boundaries of creativity and functionality in their projects.

Additionally, as more developers realize the implications of running LLMs on accessible platforms like the Raspberry Pi, we can expect a surge in innovative applications tailored for diverse sectors, ranging from education to entertainment. This collaborative evolution in technology not only fosters community engagement but also drives the collective goal of democratizing AI and making it an integral part of everyday life.

Frequently Asked Questions

What are the requirements for running Phi4-mini-reasoning on Raspberry Pi?

To run the Phi4-mini-reasoning model on a Raspberry Pi, you need at least 3.2 GB of available storage for the model and a board with at least 4 cores and 8 GB of RAM, such as the Raspberry Pi 5. The model already uses four-bit quantization, which is what keeps its storage and memory needs this low.
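A quick way to check a board against these figures is sketched below. The thresholds come from the answer above; the function names are illustrative, and `read_system` assumes a Linux system such as Raspberry Pi OS, where `os.sysconf` exposes the page size and physical page count. Note that a board sold as 8 GB reports somewhat less usable RAM than its nominal capacity, so a borderline result may need a manual look.

```python
import os
import shutil

MIN_FREE_STORAGE_GB = 3.2  # model size quoted in the article
MIN_RAM_GB = 8             # RAM figure quoted in the article
MIN_CORES = 4              # core count quoted in the article


def meets_requirements(free_storage_gb: float, ram_gb: float, cores: int) -> bool:
    """Return True if the given resources satisfy all three thresholds."""
    return (
        free_storage_gb >= MIN_FREE_STORAGE_GB
        and ram_gb >= MIN_RAM_GB
        and cores >= MIN_CORES
    )


def read_system(path: str = "/"):
    """Read free storage, physical RAM, and core count (assumes Linux)."""
    free_gb = shutil.disk_usage(path).free / 1e9
    ram_gb = os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES") / 1e9
    cores = os.cpu_count() or 1
    return free_gb, ram_gb, cores


if __name__ == "__main__":
    free_gb, ram_gb, cores = read_system()
    print(f"Free storage: {free_gb:.1f} GB, RAM: {ram_gb:.1f} GB, cores: {cores}")
    print("Meets requirements:", meets_requirements(free_gb, ram_gb, cores))
```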

Can I run the Phi4-mini-reasoning model on Raspberry Pi without any prior technical knowledge?

While deploying AI models like Phi4-mini-reasoning on Raspberry Pi is feasible, some technical knowledge is helpful. You’ll need to understand basic model deployment and possibly install necessary dependencies. However, helpful tutorials like those from Gary Explains can guide you through the process.

How does quantization in AI improve the performance of Phi4-mini-reasoning on Raspberry Pi?

Quantization reduces the precision of a model’s parameters, in the case of Phi4-mini-reasoning from floating-point numbers down to four bits. This conserves storage and memory on devices like the Raspberry Pi, and it can also speed up processing because less data has to be moved for each calculation. Performance is still limited compared to higher-spec hardware.

What kind of tasks can I expect Phi4-mini-reasoning to handle on my Raspberry Pi?

The Phi4-mini-reasoning model can handle simple reasoning tasks and basic question answering. For example, it is capable of processing logical queries similar to the ‘Alice question’ benchmark. However, due to the Raspberry Pi’s limited hardware capabilities, expect slower performance compared to more powerful setups.

Is it worth the effort to run AI on Raspberry Pi using Phi4-mini-reasoning?

Running AI on Raspberry Pi with models like Phi4-mini-reasoning can be an interesting experiment for hobbyists and learners. While the performance may not be stellar, it provides invaluable hands-on experience in deploying AI on low-power devices, and exploring such projects can be rewarding in understanding AI application in real-world scenarios.

What alternatives are there to Phi4-mini-reasoning for AI on Raspberry Pi?

If Phi4-mini-reasoning’s performance doesn’t meet your needs, consider exploring other smaller AI models available for deployment on Raspberry Pi. These models may have fewer parameters and optimized performance, making them more suitable for various applications in embedded systems.

How long does it take to run Phi4-mini-reasoning on Raspberry Pi?

Running the Phi4-mini-reasoning model on a Raspberry Pi 5 takes about 10 minutes to process a question like the ‘Alice question.’ Execution time varies with the complexity of the question, and it will be longer on boards with lower specifications.

What are the limitations of running Phi4-mini-reasoning on Raspberry Pi?

The main limitations include slow processing times and reduced performance due to hardware constraints. Additionally, the complexity of tasks this model can handle is limited compared to larger LLMs, meaning that more involved queries might not yield satisfactory responses.

How can I find tutorials for deploying Phi4-mini-reasoning on Raspberry Pi?

You can find helpful tutorials for deploying Phi4-mini-reasoning on Raspberry Pi on content platforms like YouTube or personal tech blogs. For instance, Gary Explains has demonstrated the entire setup process, making it easier for beginners to follow along and get started.

What is the significance of using a Raspberry Pi for running LLMs like Phi4-mini-reasoning?

Using a Raspberry Pi to run LLMs like Phi4-mini-reasoning highlights the ability to implement AI on compact, energy-efficient hardware. It showcases potential applications for AI in everyday devices and enables developers to experiment with AI without the need for more expensive computing resources.

| Key Point | Details |
|---|---|
| Phi4 LLM Size | 14 billion parameters, 11 GB storage |
| Phi4-mini-reasoning Model | 3.8 billion parameters, 3.2 GB storage |
| Performance on Raspberry Pi | Takes about 10 minutes to process specific questions on a Raspberry Pi 5 |
| Quantization Definition | Refers to how weights are stored; impacts model performance |
| Benchmark Question | Example question used for performance testing: ‘How many sisters does Alice’s brother have?’ |
| Alternative Models | Other smaller models available for those seeking alternatives to Phi4 |

Summary

Running Phi4-mini-reasoning on Raspberry Pi is an intriguing endeavor for tech enthusiasts and developers alike. With 3.8 billion parameters and about 3.2 GB of storage, the Phi4-mini-reasoning model is a far more practical fit for Raspberry Pi users than the full Phi4 LLM, which needs about 11 GB. While performance does not rival that of higher-end systems, the ability to run basic reasoning tasks showcases the flexibility and potential of smaller models in real-world applications. As Gary’s demonstration shows, the model can indeed answer questions, albeit at a leisurely pace of about 10 minutes per question. For those interested in experimenting with AI on Raspberry Pi, Phi4-mini-reasoning provides a noteworthy entry point into the world of large language models.